Swarm Choreography

PyTorch, Python, C++, CNN, Computer Vision, Multi-Agent System

Project Overview

This performance explores the evolving relationship between a human dancer and a swarm of robots. A machine learning gesture classification model interprets the dancer’s effort qualities, and a choreographic swarm system translates those interpretations into collective robotic behavior driven by decentralized control laws.

System Overview

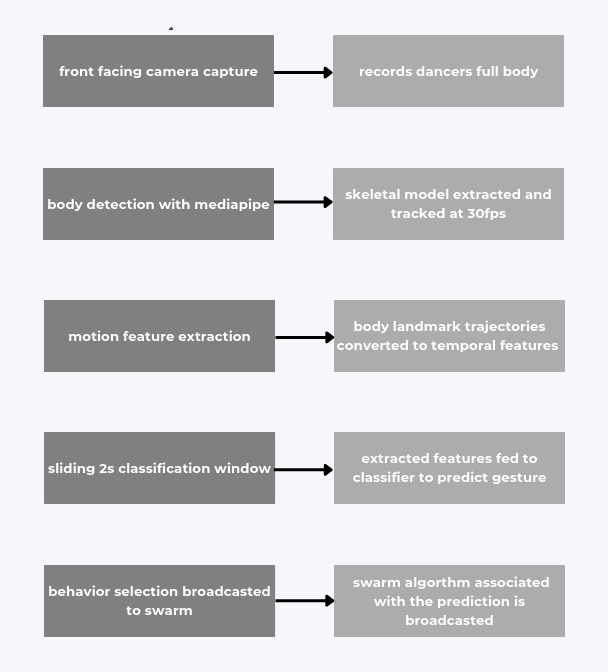

The system transforms the dancer’s movement into real-time swarm behavior by capturing video from a front-facing camera, extracting body landmarks with MediaPipe, and classifying each 2-second movement window using a Laban-inspired Random Forest model. Each predicted movement quality then triggers a corresponding algorithm on the Coachbot swarm, allowing the robots to collectively express that movement quality through distinct, choreographed swarm algorithms.
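The windowing step in this pipeline can be sketched as follows. This is a minimal illustration rather than the project's actual code: the `WindowBuffer` class, the 30 fps frame rate, and the choice of non-overlapping windows are all assumptions, and each frame is treated as an opaque list of landmarks from whatever pose estimator is in use.

```python
class WindowBuffer:
    """Collects per-frame landmarks and emits fixed-length windows.

    Hypothetical helper: fps and window_seconds are assumed values.
    """

    def __init__(self, fps=30, window_seconds=2.0):
        self.frames_per_window = int(round(fps * window_seconds))
        self.frames = []

    def push(self, landmarks):
        """Add one frame; return a full window when ready, else None."""
        self.frames.append(landmarks)
        if len(self.frames) < self.frames_per_window:
            return None
        # Hand back the completed window and start a fresh one.
        window, self.frames = self.frames, []
        return window
```

Each returned window would then be turned into a feature vector and passed to the classifier.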

The Coachbot Platform

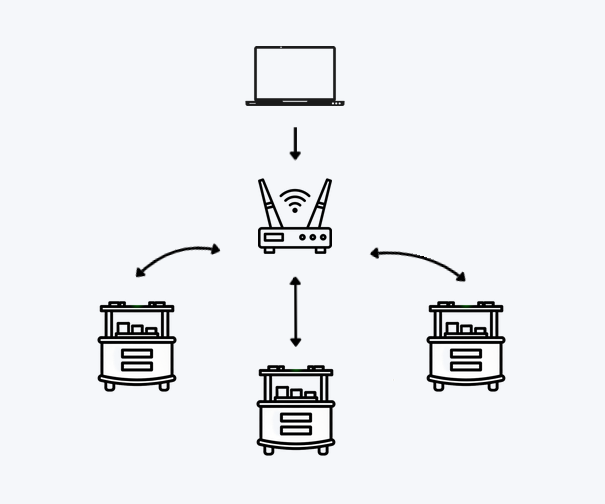

The performance uses 12 Coachbot V2.0 mobile robots, developed at Northwestern for large-scale swarm experiments. Each robot is approximately 12 cm tall and 10 cm in diameter, and localizes using the HTC Vive tracking framework.

The larger research arena includes an HTC Vive base station for global localization and an overhead camera used for recording.

Coachbots communicate through a layered network consisting of:

- an Ethernet link from the base station to a Wi-Fi router

- TCP/IP connections between the router and robots

- layer-2 broadcast messages for fast swarm-wide coordination

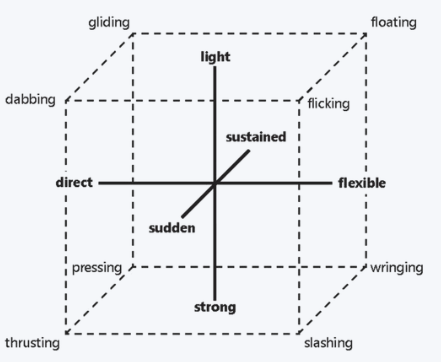

Laban Movement Overview

Laban Movement Analysis (LMA) is a framework used across dance, choreography, and somatic practice to describe and understand human movement. Developed by Rudolf Laban and later expanded by Irmgard Bartenieff, the system organizes movement into four interrelated categories:

- Body: how different parts of the body participate in the action

- Effort: the dynamic qualities of a movement, including its energy, texture, and the manner in which it unfolds

- Shape: how the body changes form and what motivates those changes

- Space: the pathways and directional choices a movement traces through the environment

Laban combined Space, Weight, and Time into what he called the Effort Actions or Action Drive. These combinations create eight named efforts: Float, Punch (Thrust), Glide, Slash, Dab, Wring, Flick, and Press.
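The eight efforts follow from choosing one pole for each of the three factors. The sketch below writes that table out using the standard LMA pole names (direct/indirect, strong/light, sudden/sustained); the function name `effort_name` is only illustrative.

```python
from itertools import product

# One pole per factor defines an Effort Action.
SPACE = ("direct", "indirect")
WEIGHT = ("strong", "light")
TIME = ("sudden", "sustained")

EFFORT_ACTIONS = {
    ("direct", "strong", "sudden"): "Punch",
    ("direct", "strong", "sustained"): "Press",
    ("direct", "light", "sudden"): "Dab",
    ("direct", "light", "sustained"): "Glide",
    ("indirect", "strong", "sudden"): "Slash",
    ("indirect", "strong", "sustained"): "Wring",
    ("indirect", "light", "sudden"): "Flick",
    ("indirect", "light", "sustained"): "Float",
}

# Sanity check: the table covers all 2 x 2 x 2 combinations exactly once.
assert set(EFFORT_ACTIONS) == set(product(SPACE, WEIGHT, TIME))


def effort_name(space, weight, time):
    """Look up the named effort for one (Space, Weight, Time) choice."""
    return EFFORT_ACTIONS[(space, weight, time)]
```

For example, `effort_name("indirect", "light", "sustained")` gives Float, and flipping both Weight and Time gives Slash.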

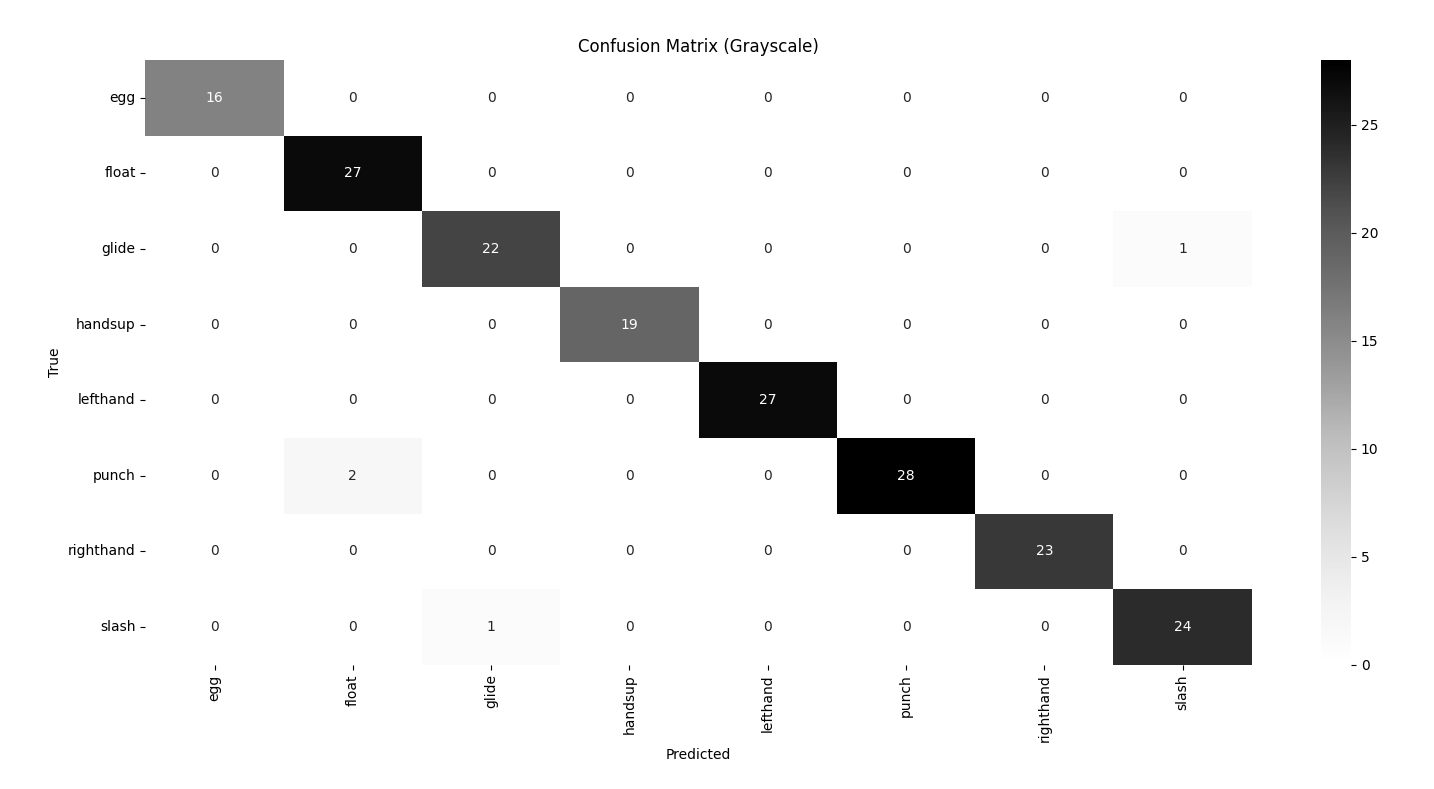

Laban Gesture Classifier

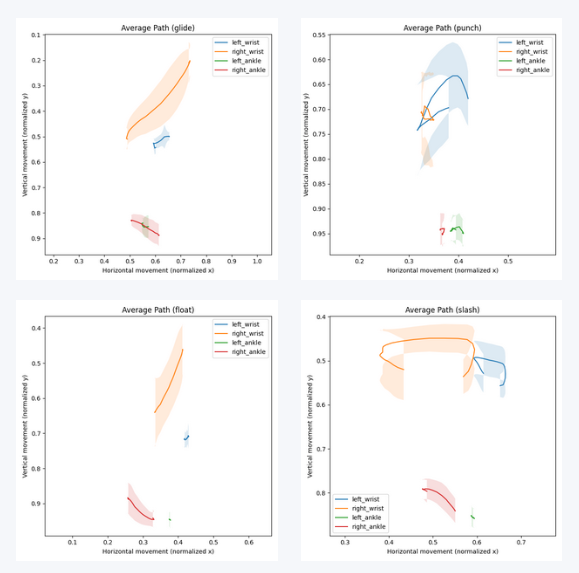

The gesture classifier focuses on four of the eight Effort Actions (Float, Punch, Glide, and Slash), each selected for its expressive contrast and its ability to be embodied clearly by both human and robot movement. (Note: The first four gestures appear in the performance as choreographic elements and are not themselves derived from Laban analysis.)

Using the Laban Effort Action Method, the dancer performed roughly 80 phrases for each of the four efforts, providing the classifier with consistent, intentional examples that captured both gesture form and expressive quality.

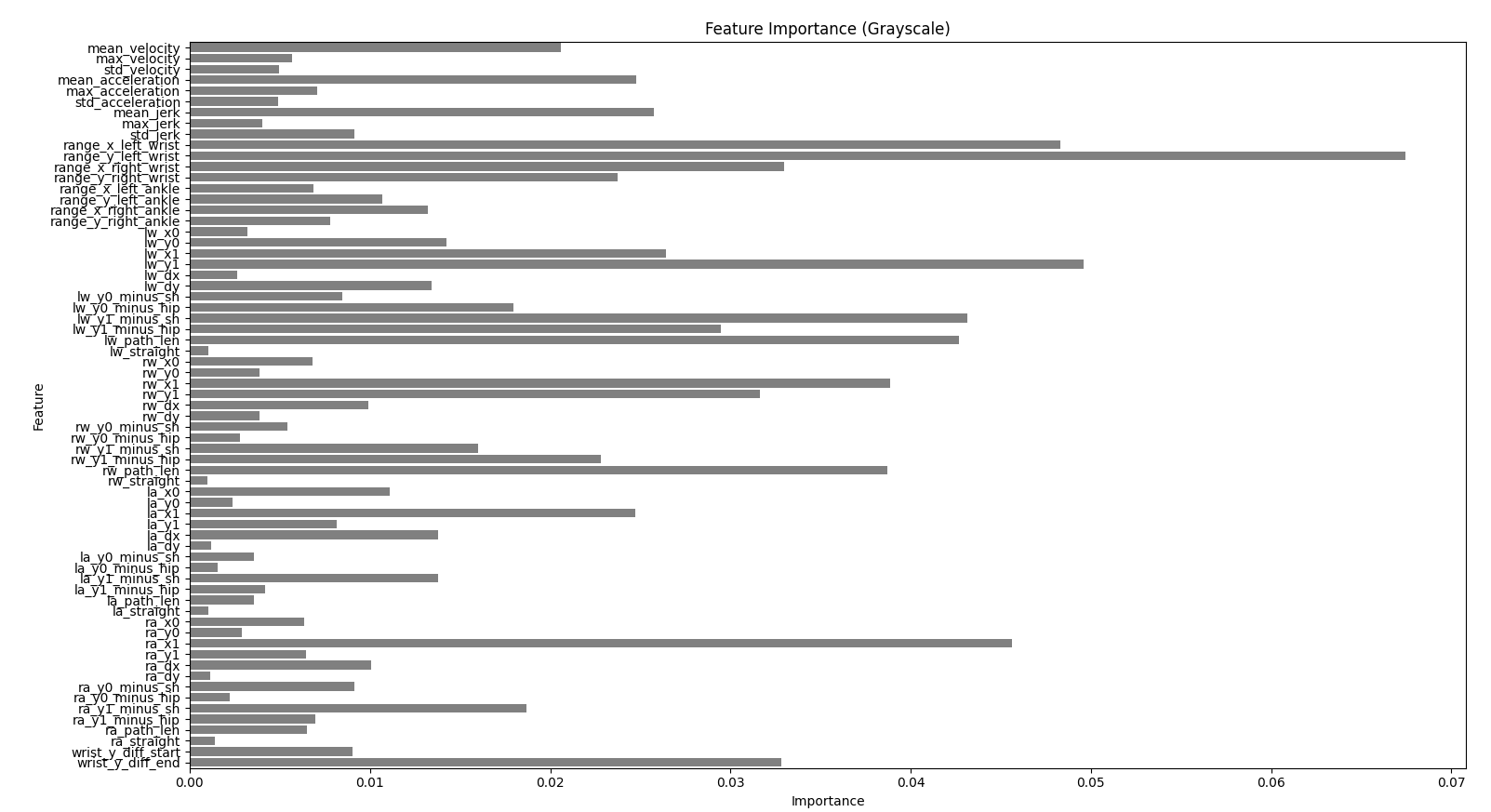

Each phrase was represented as a feature vector including:

- Kinematic features (velocity, acceleration, jerk)

- Spatial features (ranges, positions, deltas)

- Geometric features (path length, straightness)

- Normalization features (relative to hip/shoulder)
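As a rough illustration of these feature families, the sketch below computes a handful of them for a single 2D landmark trajectory. It is not the project's feature extractor: the function names, the choice of the wrist as the tracked landmark, and the specific statistics (means and ranges) are assumptions made for the example.

```python
import math


def finite_diff(points, dt):
    """First derivative of a list of (x, y) samples by finite differences."""
    return [((x1 - x0) / dt, (y1 - y0) / dt)
            for (x0, y0), (x1, y1) in zip(points, points[1:])]


def mean_norm(vectors):
    """Mean Euclidean magnitude of a list of 2D vectors (0.0 if empty)."""
    if not vectors:
        return 0.0
    return sum(math.hypot(x, y) for x, y in vectors) / len(vectors)


def phrase_features(wrist, hip, shoulder_width, dt):
    """Hypothetical feature dict for one phrase of wrist and hip samples."""
    # Normalization: position relative to the hip, scaled by shoulder width.
    rel = [((x - hx) / shoulder_width, (y - hy) / shoulder_width)
           for (x, y), (hx, hy) in zip(wrist, hip)]
    # Kinematic: successive derivatives of the normalized position.
    vel = finite_diff(rel, dt)
    acc = finite_diff(vel, dt)
    jerk = finite_diff(acc, dt)
    # Geometric: path length and straightness (displacement / path length).
    path_length = sum(math.hypot(x1 - x0, y1 - y0)
                      for (x0, y0), (x1, y1) in zip(rel, rel[1:]))
    displacement = math.hypot(rel[-1][0] - rel[0][0], rel[-1][1] - rel[0][1])
    straightness = displacement / path_length if path_length > 0 else 0.0
    xs = [p[0] for p in rel]
    ys = [p[1] for p in rel]
    return {
        "mean_speed": mean_norm(vel),
        "mean_accel": mean_norm(acc),
        "mean_jerk": mean_norm(jerk),
        "range_x": max(xs) - min(xs),  # spatial extent
        "range_y": max(ys) - min(ys),
        "path_length": path_length,
        "straightness": straightness,
    }
```

A perfectly straight reach has straightness close to 1, while a zigzag covering the same net displacement scores lower, which is the kind of contrast that separates direct from indirect efforts.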

The resulting random forest classifier successfully learned distinguishing patterns across the four efforts. Float often appears as low-velocity motion with gentle directional variability, while Punch is characterized by sharp velocity spikes and strong directional commitment. Glide maintains smooth, sustained timing with direct spatial pathways, whereas Slash shows quick, forceful movement with more spatially indirect trajectories.

During performance, the classifier runs continuously. Every two seconds, it outputs a predicted effort label, which then triggers a corresponding swarm behavior. In this way, the dancer’s movement becomes a real-time choreographic score, dynamically shaping how the swarm organizes, disperses, or transforms its collective motion.
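The label-to-behavior step can be sketched as a simple lookup. Everything named here is hypothetical: the `BEHAVIORS` table, the behavior identifiers, and the `send` callback stand in for whatever mechanism the project actually uses to command the swarm.

```python
# Hypothetical mapping from predicted effort label to a swarm behavior name.
BEHAVIORS = {
    "Float": "float",
    "Punch": "punch",
    "Glide": "glide",
    "Slash": "slash",
}


def make_dispatcher(behaviors, send):
    """Return a callback that forwards each predicted label to the swarm."""

    def on_prediction(label):
        behavior = behaviors.get(label)
        if behavior is None:
            # Unknown label: leave the swarm in its current behavior.
            return None
        send(behavior)
        return behavior

    return on_prediction
```

In use, `send` would be whatever function transmits a command to the robots; for a dry run it can be as simple as `print` or a list's `append`.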

Swarm Algorithms

The swarm algorithms were designed as creative interpretations of Laban Effort qualities: translations of human expressive dynamics into fully distributed robotic behaviors. Each algorithm began in simulation, where considerations such as shape, directionality, flow, and effort quality guided both the control logic and the emergent group patterns.
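To make "fully distributed" concrete, here is a generic sketch of a decentralized control law in which each robot computes its own velocity from its position and its nearby neighbors only. It is not one of the project's algorithms: the orbiting rule, the function name `orbit_velocity`, and all gains and distances are assumptions chosen for illustration.

```python
import math


def orbit_velocity(pos, center, radius, neighbors,
                   k_radial=1.0, speed=0.1, min_gap=0.15, k_sep=0.5):
    """Velocity command for one robot, using only local information.

    pos, center: (x, y) tuples; neighbors: list of nearby robots' (x, y).
    All parameter values are illustrative assumptions.
    """
    dx, dy = pos[0] - center[0], pos[1] - center[1]
    d = math.hypot(dx, dy)
    if d == 0.0:
        return (speed, 0.0)  # exactly at the center: head out along +x
    ux, uy = dx / d, dy / d   # unit vector pointing away from the center
    tx, ty = -uy, ux          # counterclockwise tangent direction
    radial = k_radial * (radius - d)  # positive pushes outward, negative inward
    vx = radial * ux + speed * tx
    vy = radial * uy + speed * ty
    # Separation: push away from any neighbor closer than min_gap.
    for nx, ny in neighbors:
        gx, gy = pos[0] - nx, pos[1] - ny
        g = math.hypot(gx, gy)
        if 0.0 < g < min_gap:
            vx += k_sep * (min_gap - g) * gx / g
            vy += k_sep * (min_gap - g) * gy / g
    return (vx, vy)
```

Because every robot runs the same rule with no central coordinator, the circling pattern emerges from the group rather than being assigned to it. Changing the gains changes the movement quality, for instance a slower `speed` with a softer `k_radial` for a drifting feel.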

Encircling, Float, and Slash are the three most complex and visually dynamic algorithms, each crafted to evoke a distinct movement quality while maintaining decentralized control across the swarm. Additional choreographed behaviors (Left, Right, Glitch, Punch, Glide, etc.) are documented on GitHub, but the following three form the expressive core of the system.

Performance

Gratitude

This project is rooted in a deep appreciation for human movement and expression. Choreographing this duet between dancer and robots has become an act of reverence for the subtle ways humans communicate through gesture, through motion, through shared attention and empathy.

Swarm behavior in nature offers such powerful examples of emergent coordination, care, and collective resilience. These systems are not just biologically interesting; they are politically meaningful, especially now. Studying swarm dynamics is, in its own way, a study of how groups survive, resist, and move together through constraint.

I also want to acknowledge the technologies and tools, including large language models, that have supported an enormous amount of learning and debugging throughout this project. None of this work exists in isolation. It emerges from a network of resources, labor, and knowledge, human and nonhuman, that I hold with deep gratitude.

I want to honor that network here: Thomas DeFrantz, whose work grounds this project in practices of liberation through movement. Matt Elwin, my project advisor in the MSR program, whose robotics expertise and care for students’ growth shaped this journey. Vaishnavi Dornadula, who granted me access to the Coachbot platform she built and maintains. Emma Bernstein, who held a constant light to my love for this work. Eden Naftali and Sam Mayers, who held my hand as I moved toward challenges. Lily Sharatt, for her creative being and the composition that accompanies the recording. My family, who remain constant supporters of everything that brings me joy. And my entire cohort, whose curiosity continues to inspire me, especially Pushkar, my desk mate.

Inspiration

Early inspiration for this project came from Studio Drift’s Murmuring Minds at LUMA.

GitHub

Swarm Control Algorithms

Random Forest–driven swarm algorithms for the Coachbot platform

View on GitHub

Laban Effort Gesture Classifier

Random Forest classifier trained on embodied movement phrases

View on GitHub