Interactive Perception with TurtleBot

ROS2, Python, C++, Computer Vision, CNN, Path Planning

Project Overview

For my winter project in my robotics master’s program, I created a symbiotic dance between a TurtleBot3 (a compact mobile robot) and a human dancer. The project uses computer vision to identify colored markers on the dancer and the TurtleBot, enabling the robot and the human to respond dynamically to each other’s movements while collaboratively generating ephemeral maps of movement. The TurtleBot3 and dancer create visible traces of their movements, engaging in a dialogue about presence and belonging in space.

This piece investigates the interplay between spatial awareness, the concept of home, and human-robot collaboration in a creative context. Inspired by Shannon Dowling’s improvisational principles and Laban Dance Movement Analysis, this project blends performance art and robotics to explore themes of space.

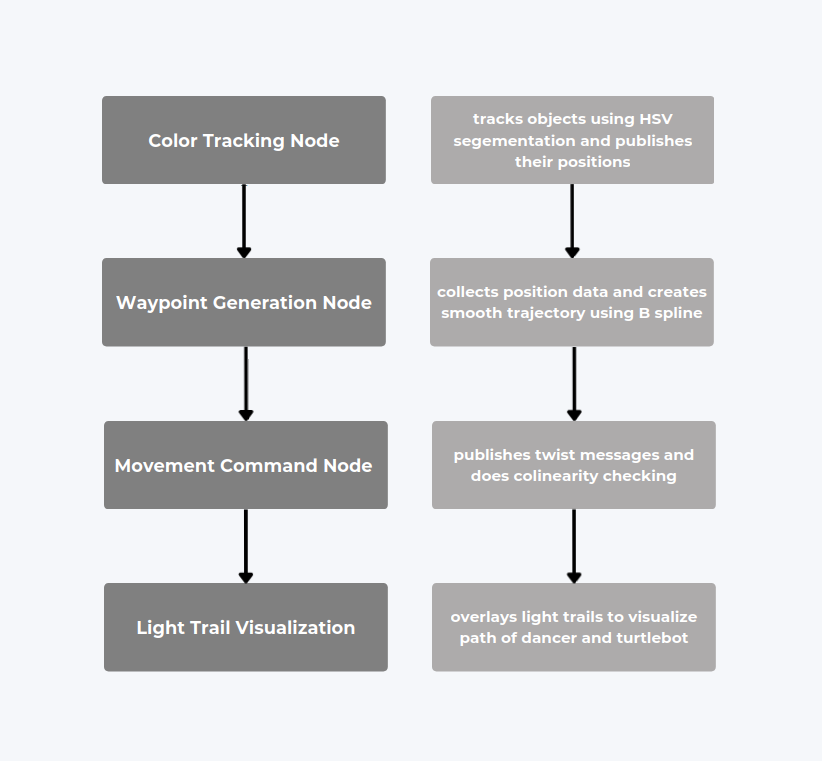

System Overview

The TurtleBots

The TurtleBot3 platforms are built on a modular robotic system equipped with a Raspberry Pi 3 and an OpenCR board, which provide computational power and motor control. The OpenCR board enables direct control of the TurtleBots’ Dynamixel motors, while the Raspberry Pi handles higher-level processing and ROS2 communication.

Color Tuning and Tracking with RealSense

I implemented a computer vision pipeline for real-time object detection that combines YOLOv8 with HSV masking for fine-tuned color segmentation. I wrote an HSV color tuner to adjust the HSV thresholds before each dance. Correctly tuning the HSV values was crucial to ensuring accurate object detection and path planning.
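As a rough illustration of the HSV masking step, here is a minimal pure-Python sketch that thresholds pixels in HSV space and returns the centroid of the matching pixels. It is not the project's code: the function names, the tiny list-of-rows image format, and the threshold values are all assumptions made for the example. A real pipeline would more likely use OpenCV (`cv2.cvtColor` followed by `cv2.inRange`) on camera frames.

```python
import colorsys

def in_hsv_range(rgb, lower, upper):
    """Return True if an (r, g, b) pixel (0-255) lies inside an HSV range.

    lower/upper are (h, s, v) with h in degrees [0, 360) and s, v in [0, 1].
    If lower hue > upper hue, the range wraps around 0 degrees.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h *= 360.0
    if lower[0] <= upper[0]:
        hue_ok = lower[0] <= h <= upper[0]
    else:
        hue_ok = h >= lower[0] or h <= upper[0]
    return hue_ok and lower[1] <= s <= upper[1] and lower[2] <= v <= upper[2]

def mask_centroid(image, lower, upper):
    """Return the (u, v) centroid of pixels inside the HSV range, or None.

    image is a list of rows, each row a list of (r, g, b) tuples.
    """
    us, vs = [], []
    for v_idx, row in enumerate(image):
        for u_idx, pixel in enumerate(row):
            if in_hsv_range(pixel, lower, upper):
                us.append(u_idx)
                vs.append(v_idx)
    if not us:
        return None
    return (sum(us) / len(us), sum(vs) / len(vs))

# Hypothetical thresholds; real values would come from the tuner before each dance.
GREEN_LOWER, GREEN_UPPER = (90.0, 0.4, 0.4), (150.0, 1.0, 1.0)
PINK_LOWER, PINK_UPPER = (300.0, 0.3, 0.5), (350.0, 1.0, 1.0)
```

Calling `mask_centroid(frame, PINK_LOWER, PINK_UPPER)` on each frame would give one pixel position per marker color, which is the input the later steps work from.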

The dancer wore a pink LED marker, while the TurtleBot had its own distinct color-coded markers (green on its center and orange on its front). This setup enabled the system to identify and differentiate between movement patterns, allowing the robot to align with and follow specific trajectories in response to the trajectory of the dancer’s movement.


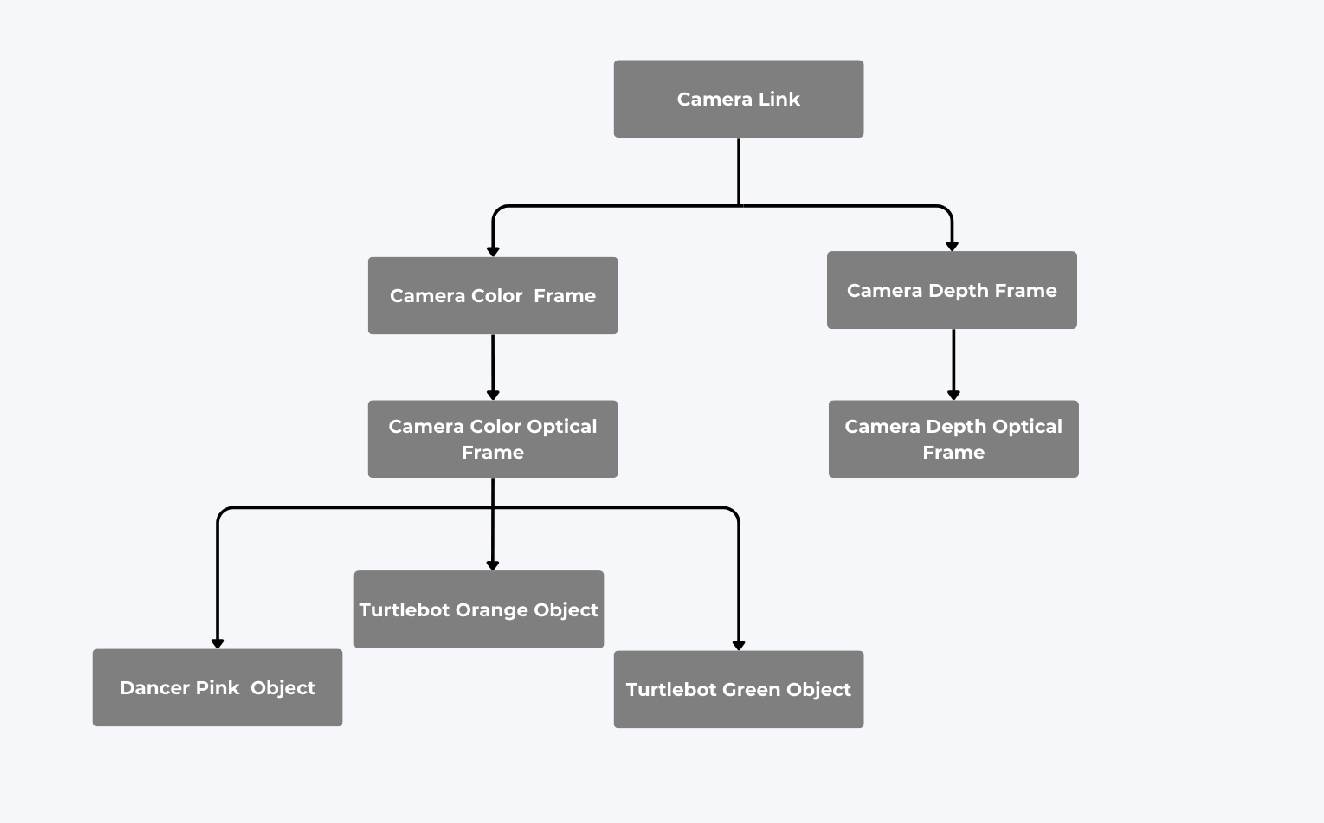

Frames and Transformations: Understanding the Space

A significant part of this project was setting up the correct frames of reference for the TurtleBot’s movement. Originally, I had the RealSense detect the x, y, and z positions of the pink and green pixels, and I converted those pixels to real-world coordinates using the camera intrinsics. I then defined a transformation from the camera frame to the TurtleBot frame and another from the camera frame to the dancer frame, and transformed the dancer’s path into the frame of the TurtleBot. I originally thought the best way for the TurtleBot to reach the correct points was to move relative to its own frame. However, the odometry values from the TurtleBot were very inconsistent, so I abandoned this approach and ended up expressing all objects with respect to the camera.
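The pixel-to-world conversion can be sketched with the standard pinhole camera model. This is an illustrative version that ignores lens distortion; the function names and the intrinsic values in the usage note are hypothetical, and with a RealSense the same step is normally done with `rs2_deproject_pixel_to_point` from `pyrealsense2`.

```python
def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Convert a pixel (u, v) with a depth in meters to a 3D point in the camera frame.

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    Lens distortion is ignored in this sketch.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def relative_position(point_a, point_b):
    """Vector from point_a to point_b, both expressed in the camera frame."""
    return tuple(b - a for a, b in zip(point_a, point_b))
```

For example, with made-up intrinsics `fx = fy = 600`, `cx = 320`, `cy = 240`, a pixel at the image center maps to a point straight ahead of the camera at the measured depth. Keeping both markers in the camera frame means the offset between robot and dancer is just `relative_position(robot_point, dancer_point)`, with no dependence on odometry.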

Waypoint Tracking and Conversion

Once I had an accurate way to detect position and movement in the correct frames, the next challenge was to create a smooth, navigable path for the TurtleBot to follow. I began by tracking the centroid of the detected dancer’s position and recording these points over time. Initially, I attempted to smooth the raw path data using a moving average filter, but this led to unintended distortions. Instead, I merged nearby contours to stabilize the centroid calculations and then implemented a B-spline algorithm to generate smooth waypoints.
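To show what B-spline smoothing does to a recorded path, here is a minimal sketch of a uniform cubic B-spline that treats the raw centroids as control points. The source does not say which B-spline routine was used (a library call such as SciPy's `splprep` would be a common choice), so the function name and the sampling density are assumptions.

```python
def cubic_bspline(points, samples_per_segment=5):
    """Sample a uniform cubic B-spline using the raw (x, y) path as control points.

    The curve approximates the control points rather than passing through them,
    which is what smooths out jitter. Needs at least 4 points.
    """
    if len(points) < 4:
        return list(points)
    smooth = []
    for i in range(len(points) - 3):
        p0, p1, p2, p3 = points[i:i + 4]
        for k in range(samples_per_segment):
            t = k / samples_per_segment
            # Uniform cubic B-spline basis functions (they sum to 1).
            b0 = (1 - t) ** 3 / 6
            b1 = (3 * t**3 - 6 * t**2 + 4) / 6
            b2 = (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6
            b3 = t**3 / 6
            smooth.append((
                b0 * p0[0] + b1 * p1[0] + b2 * p2[0] + b3 * p3[0],
                b0 * p0[1] + b1 * p1[1] + b2 * p2[1] + b3 * p3[1],
            ))
    # Close the curve with the end of the last segment (t = 1).
    _, p1, p2, p3 = points[-4:]
    smooth.append(((p1[0] + 4 * p2[0] + p3[0]) / 6,
                   (p1[1] + 4 * p2[1] + p3[1]) / 6))
    return smooth
```

One consequence of this particular formulation is that the smoothed path starts and ends slightly inside the raw path, since a uniform B-spline does not interpolate its end control points.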

To prevent redundant waypoints from being generated when the dancer stood still, I filtered out excessive points and set a time limit on path recording. The final system captures a 15-second window of motion and converts it into a sequence of waypoints that the TurtleBot can follow in order.
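The filtering and time limit could look something like the sketch below. The 15-second window comes from the description above; the minimum spacing of 5 cm, the function name, and the `(t, x, y)` sample format are assumptions for illustration.

```python
import math

def record_waypoints(samples, min_spacing=0.05, window_s=15.0):
    """Keep path points from a stream of (t, x, y) samples.

    A point is kept only if it falls within window_s seconds of the first
    sample and is at least min_spacing (meters) from the last kept point,
    so a dancer standing still does not generate redundant waypoints.
    """
    kept = []
    t_start = None
    for t, x, y in samples:
        if t_start is None:
            t_start = t
        if t - t_start > window_s:
            break  # recording window is over
        if not kept or math.hypot(x - kept[-1][0], y - kept[-1][1]) >= min_spacing:
            kept.append((x, y))
    return kept
```

Filtering by distance rather than by time means the number of waypoints reflects how far the dancer travelled, not how long the recording ran.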

TurtleBot Orientation and Following the Path

I placed an additional color marker on the front of the TurtleBot. By detecting both the green central marker and the orange front marker, I computed the robot’s orientation relative to the camera and relative to its next waypoint. Using a collinearity check, and ensuring the orange front marker was the part of the TurtleBot closest to the waypoint, I determined the heading direction and adjusted it within the TurtleBot’s frame of reference. This allowed the robot to align itself correctly before moving through the generated waypoints in order.
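One way to express the two-marker heading check is with `atan2`, as in the sketch below. The function names and the 0.1 rad tolerance are assumptions, not the project's actual values. A heading error near zero means the center marker, front marker, and waypoint are roughly collinear with the front marker on the waypoint side; an error near ±π is also collinear but means the robot is facing away.

```python
import math

def heading(center, front):
    """Heading angle (radians) of the vector from the center marker to the front marker."""
    return math.atan2(front[1] - center[1], front[0] - center[0])

def heading_error(center, front, waypoint):
    """Signed angle the robot must turn to face the waypoint, wrapped to [-pi, pi]."""
    desired = math.atan2(waypoint[1] - center[1], waypoint[0] - center[0])
    error = desired - heading(center, front)
    return math.atan2(math.sin(error), math.cos(error))

def is_aligned(center, front, waypoint, tol_rad=0.1):
    """True when the robot is facing the waypoint within tol_rad (hypothetical tolerance)."""
    return abs(heading_error(center, front, waypoint)) <= tol_rad
```

The sign convention here assumes a standard x-right, y-up plane. If the coordinates are taken directly from image space, where y grows downward, the turn direction is mirrored.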

After several iterations, I implemented a system in which:

- The dancer and TurtleBot are detected in the camera’s frame.

- The dancer’s movements are tracked for 15 seconds.

- The dancer’s movement is converted into a path.

- Smooth waypoints along the dancer’s path are generated using a B-spline.

- The TurtleBot orients itself towards the first waypoint until collinearity is reached.

- The TurtleBot moves to the first waypoint and then continues through the list of waypoints until it is completed.
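The last two steps amount to a rotate-then-drive controller. The sketch below is a simplified stand-in, not the project's code: it assumes the robot pose `(x, y, theta)` is already available from the camera, and the speeds, tolerances, and the small unicycle simulation are made up for illustration. On the real robot the returned velocities would typically be sent as a `geometry_msgs/Twist` on `cmd_vel`.

```python
import math

def wrap_angle(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def follow_step(pose, waypoints, idx, reach_tol=0.05, align_tol=0.1,
                lin_speed=0.1, ang_speed=0.5):
    """Return (linear, angular, idx) for one control step.

    Rotates in place until the robot faces waypoint idx, then drives straight.
    Waypoints closer than reach_tol are considered reached and skipped.
    """
    x, y, theta = pose
    while idx < len(waypoints) and math.hypot(
            waypoints[idx][0] - x, waypoints[idx][1] - y) < reach_tol:
        idx += 1
    if idx >= len(waypoints):
        return 0.0, 0.0, idx  # path completed, stop
    wx, wy = waypoints[idx]
    error = wrap_angle(math.atan2(wy - y, wx - x) - theta)
    if abs(error) > align_tol:
        return 0.0, math.copysign(ang_speed, error), idx  # turn toward waypoint
    return lin_speed, 0.0, idx  # aligned, drive forward

def simulate(pose, waypoints, dt=0.05, max_steps=5000):
    """Run follow_step on an ideal unicycle model and return (final_pose, idx)."""
    x, y, theta = pose
    idx = 0
    for _ in range(max_steps):
        v, w, idx = follow_step((x, y, theta), waypoints, idx)
        if idx >= len(waypoints):
            break
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta = wrap_angle(theta + w * dt)
    return (x, y, theta), idx
```

Running `simulate((0.0, 0.0, 0.0), [(1.0, 0.0), (1.0, 1.0)])` drives the simulated robot along an L-shaped path. Because the controller re-checks alignment on every step, it stops and turns again whenever the heading drifts outside the tolerance.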